AI Undress Apps: What You Need to Know

Is the allure of effortless image manipulation truly the future, or a descent into ethically questionable waters? The rise of "undress AI" apps and deepfake technology presents a significant challenge to our understanding of privacy, consent, and the very nature of digital reality.

The digital landscape is constantly evolving, and with it comes the potential for both groundbreaking innovation and unforeseen ethical dilemmas. The emergence of artificial intelligence that can remove clothing from images, often called "undress AI," has ignited a complex debate. These tools use advanced generative AI models and promise users quick, seemingly effortless image transformations. However, their consequences are far-reaching, touching on privacy, consent, and the potential for misuse. "Nudifying" images, generating deepfake nudes, and digitally undressing people without their knowledge or consent raise serious red flags. Although the technology is often marketed as a means of artistic exploration or style experimentation, it fundamentally alters the original intent of an image and can cause real harm, particularly to people who never consented. The availability of these tools demands a critical examination of the ethics and responsibilities surrounding their use.

To consider the potential impact of this technology, imagine a hypothetical scenario involving a public figure: a well-known actress, whom we will call Anya Sharma. Her photograph is taken at a public event. A malicious actor then runs the image through an "undress AI" application, which generates a fake nude of her, and the altered image is disseminated online. The damage to Anya's reputation, career, and personal life could be immense, and the emotional distress and psychological harm caused by such an invasion of privacy can be devastating. Beyond the harm to the individual, the proliferation of such images feeds a culture of harassment and intimidation, further eroding trust and safety online. The ease with which these manipulations can be made, combined with the difficulty of detecting them, poses a significant threat to personal privacy and security.

These technologies have also ushered in a new era of visual manipulation that challenges traditional notions of truth and authenticity. When images can be altered this easily, distinguishing reality from fabrication becomes increasingly difficult. This erosion of trust reaches far beyond personal privacy; it can also undermine confidence in institutions and the media. Consider the impact on investigative journalism, legal proceedings, or political discourse if any image could be convincingly doctored and the public struggled to tell what is real. The rise of deepfakes and "undress AI" underscores the urgent need for public dialogue and for effective safeguards against the harmful consequences of these technologies.

Consider the core mechanics behind these "undress AI" applications. They rely on machine learning models, typically generative image models trained on vast datasets of images. A user uploads a photo, and the model identifies the clothed regions and replaces them with synthetic content that predicts what might be underneath. Crucially, nothing is actually "revealed": the output is a fabrication generated from patterns in the training data. The "undressher app" and similar services often boast of "visually stunning outputs that preserve the authenticity of original images," but this claim is misleading. Some apps apply artistic filters to polish the final product, yet digitally undressing someone fundamentally alters the original image, however sophisticated the processing.

The promise of "trying out different styles and looks" or "removing clothes and items in your pictures in creative ways" often serves as a marketing veneer. Behind it lie ethical concerns as well as serious legal ramifications. Take fashion or product visualization: in theory, a designer could use these apps to test clothing styles on a virtual model. However, the potential for misuse far outweighs such creative applications. The same technology can be used to create fake advertisements, spread misinformation, or manipulate people with fabricated intimate images. The real conversation, therefore, is not about "creative ways" but about responsible use, safety, and the need for robust regulation.

The freemium business model common among "undress AI" applications further complicates matters. Some features are free, but advanced functions, such as higher-resolution output or more sophisticated filters, often require payment. As a result, the most powerful tools, the ones that produce the most realistic and potentially damaging deepfakes, sit behind a paywall. The model also gives developers an incentive to make their output ever more realistic, because realism is what attracts paying customers. This dynamic further fuels the ethical concerns and the potential for misuse.

Marketing slogans such as "explore the power of nudify AI" or "undress her within a few seconds" reveal the underlying motivations. They are aimed at people looking to exploit or commodify the human body. Phrases like "make anyone deepnude" normalize the creation of non-consensual images, and the promise of "getting nudes in seconds" puts speed and convenience ahead of any ethical consideration, ignoring entirely the consent of the person depicted. The message is clear: these applications are not merely image editors but tools for creating, and potentially spreading, content that violates people's privacy without their consent.

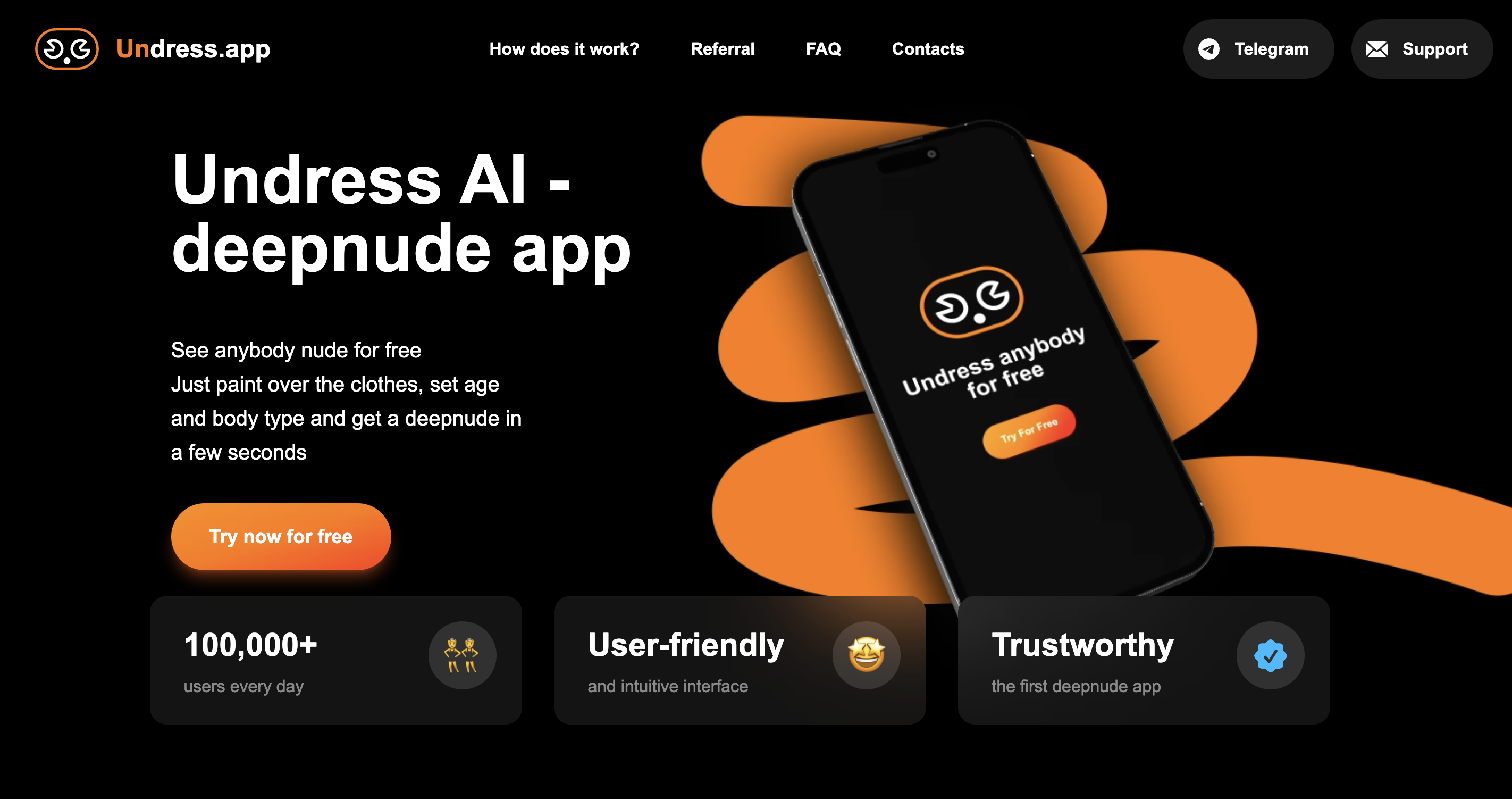

Ease of access compounds the potential for harm. The applications are available on multiple platforms, from websites to mobile apps, and some even advertise themselves as "free generators." Anyone with a smartphone and an internet connection can use them. With few safeguards in place and a very low barrier to entry, the potential for misuse is high, and the wide availability of these apps makes it difficult to control how the generated content spreads. As a result, the potential for abuse is effectively unbounded.

The speed at which this technology is evolving presents its own challenge. With each improvement, the generated deepfakes become more realistic, and the ability to fabricate convincing nudes is likely to grow more sophisticated in the years to come. Detecting and preventing misuse will therefore become harder, and new methods for detecting and flagging these manipulations must be developed to keep pace. Society's understanding of the implications, and the guidelines governing the technology's use, need to evolve just as quickly.

The industries likely to be affected include media, law, fashion, and entertainment. Deepfakes can damage reputations and spread misinformation, which affects any organization, company, or individual whose image is involved. In legal proceedings, deepfakes could be used to fabricate or misrepresent evidence, or even to create false testimony. In fashion, they could be used to showcase products on people's likenesses without their consent. Each of these sectors is therefore likely to feel the effects of undress AI.

The ethical discussion revolves around several key questions. Does the technology violate the right to privacy? Does it enable individuals to violate the privacy of others? Do the potential harms outweigh any potential benefits? Are developers responsible for how their technology is used? What measures should regulate the use of these tools? The debate is further complicated by gaps in the law: few legal frameworks specifically address "undress AI" applications, and protections against intimate-image abuse vary widely between jurisdictions. This ambiguity creates a legal grey area in which perpetrators can often act with little fear of consequences. New legislation may be required to address these emerging challenges.

Developing deepfake detection tools is crucial to countering this technology. Such tools can help identify manipulated images and videos, which is one of the most effective ways to slow the spread of misinformation. Detection technology needs to keep evolving alongside undress AI itself, so that the public stays informed about the risks they face online.

The conversation must also address consent. These tools can manipulate anyone's image without that person's consent: individuals are typically never asked before their images are altered, and even where permission is claimed, it is hard to verify, so trust and safety remain a concern. The lack of consent is a fundamental ethical violation with implications for society as a whole.

Responsibility does not lie with users alone; developers bear a significant share of it. They should implement safeguards to prevent misuse of their technology and be transparent about its capabilities and potential for harm, which helps build a culture of responsibility. Developers are also accountable for harms arising from the use of their technology, even when they do not create the content themselves.

In conclusion, the emergence of "undress AI" applications and the broader capabilities of deepfake technology represent a critical juncture. The potential for misuse is immense, and the ethical and legal implications are significant. A comprehensive approach is required to mitigate the risks, which should include legislation, the development of detection tools, and robust ethical guidelines for developers and users. The future of image editing and the protection of personal privacy depend on responsible innovation and a commitment to safeguarding against the harmful consequences of this technology.

Author: Ms. Anastasia Fay
