Deep Linking & AI Deepfakes: A Guide & Latest News

Has the digital world become a playground for malicious actors, and is anyone truly safe anymore? The recent surge in deepfake technology, targeting high-profile figures and spreading misinformation, highlights a chilling reality: the lines between truth and fabrication are rapidly blurring, and the consequences are far-reaching.

The speed at which technology evolves often outpaces our ability to understand its implications. Deepfakes, powered by artificial intelligence, allow for the creation of incredibly realistic, yet entirely fabricated, videos and images. These manipulated media pieces can convincingly depict individuals saying or doing things they never did, leading to a crisis of trust and a potential for significant harm. This isn't just a technological curiosity; it's a weapon, and its misuse is escalating.

The alarming spread of deepfakes has raised concerns across various sectors, from entertainment to politics. The case of Rashmika Mandanna, who was targeted by a manipulated video, served as a stark warning. The deepfake video, seen millions of times across various social media platforms, underscores the potential for widespread dissemination and the difficulty in containing such content. It highlights the urgent need for robust detection and mitigation strategies.

The incident involving Ms. Mandanna is just one example. Others, including Priyanka Chopra Jonas and Alia Bhatt, have also been targeted by these malicious manipulations. The vulnerability of public figures to this type of attack underscores the importance of recognizing that the threat is not limited to any specific demographic or profession. Deepfakes can impact anyone.

Kajol, a well-known Indian actress, faced a similar ordeal. The spread of a manipulated video featuring her sparked public outrage and underscored the need for enhanced data privacy and cybersecurity measures.

The rise of such technology raises critical questions about the authenticity of information and the erosion of public trust. The impact extends beyond individual victims; it undermines the very foundations of societal trust and the integrity of media consumption.

Rashmika Mandanna

Rashmika Mandanna, a prominent actress in the Indian film industry, unfortunately became the victim of deepfake technology. This section delves into her background, career, and the impact of the deepfake incident.

| Category | Details |
|---|---|
| Full Name | Rashmika Mandanna |
| Date of Birth | April 5, 1996 |
| Place of Birth | Kodagu, Karnataka, India |
| Nationality | Indian |
| Occupation | Actress, Model |
| Known For | Her work in Telugu, Kannada, Tamil, and Hindi films |
| Debut Film | Kirik Party (2016) |
| Key Films | Geetha Govindam, Dear Comrade, Pushpa: The Rise, Varisu |
| Awards and Recognition | Several awards, including the Filmfare Award for Best Actress Kannada |
| Deepfake Incident | A manipulated video featuring her went viral, causing significant concern and raising awareness about the misuse of AI technology. |
| Online Profile | IMDb |

The deepfake incident involving Rashmika Mandanna highlighted the urgency with which these threats must be addressed. The incident and the resulting public discourse have amplified calls for legislative changes and robust monitoring of online content to curb the spread of misinformation and protect individual privacy.

The core of the issue lies in the ability to manipulate digital content with remarkable ease and realism. This allows malicious actors to create believable falsifications that can damage an individual's reputation, sow discord, and even influence public opinion. The fact that such attacks can be launched with relative anonymity and rapidly disseminated across various platforms exacerbates the challenge.

Understanding the mechanics of deepfakes is critical to effectively combating them. These manipulated media pieces often rely on advanced techniques such as face swapping, voice cloning, and body manipulation. When combined, these techniques create extremely realistic forgeries that are often difficult to distinguish from genuine content.

The investigation into the Rashmika Mandanna deepfake case led to the tracking of suspects. Law enforcement agencies are working to identify and prosecute individuals involved in the creation and dissemination of manipulated content, aiming to provide a sense of justice and deter future attacks.

The issue of deepfakes also touches upon the ethical implications of artificial intelligence. As AI technology continues to evolve, the potential for misuse is likely to grow. This demands a serious discussion about responsible AI development, ethical guidelines for content creation, and the legal ramifications of spreading false or misleading information.

One of the biggest challenges is detecting deepfakes. The sophistication of these forgeries makes them increasingly difficult to identify, even with the aid of advanced detection tools. This calls for innovative solutions and collaborative efforts to improve detection and mitigation strategies, as well as public education so that people become more vigilant and critical consumers of media content.

The Anatomy of a Deep Link and Its Importance

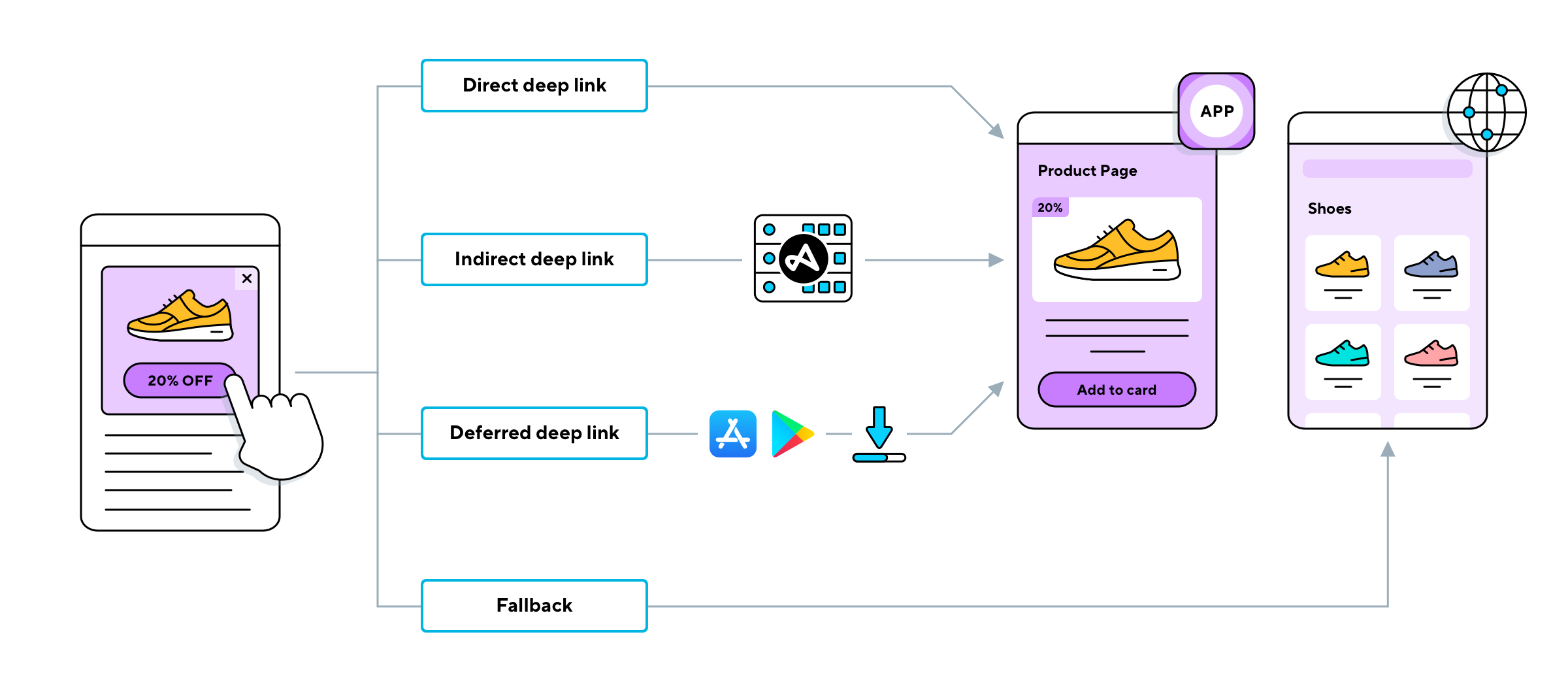

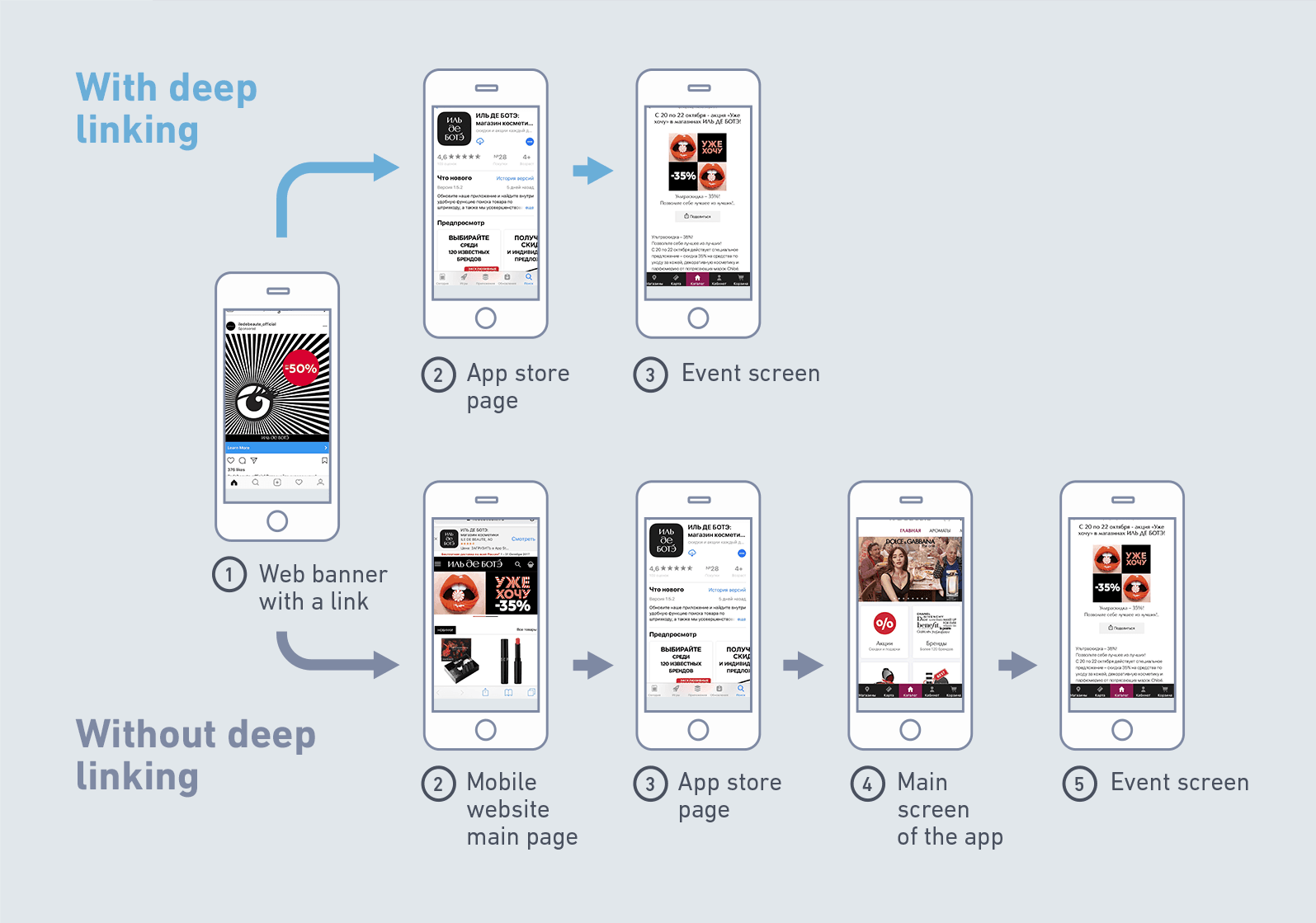

Consider for a moment the fundamental structure of the internet: a vast network of interconnected websites, each containing a multitude of pages, sections, and resources. Within this digital landscape, hyperlinks serve as the pathways that guide users from one point to another. While a standard hyperlink often directs a user to a website's homepage, a deep link offers a more precise approach.

Deep links are targeted connections: they send users to a specific page or section within a website rather than to its homepage. This streamlines the user experience by giving immediate access to the relevant content. Creating such a link requires understanding the components of a URL.

The primary parts that make up a URL are:

| URL Component | Description | Example |
|---|---|---|
| Scheme | The protocol used to reach the resource. | https |
| Authority (hostname) | The domain name of the website, optionally followed by a port. | www.example.com |
| Path | Identifies a particular page or resource within the website. | /products/details |
| Query parameters | Key-value pairs that narrow down the content to show. | ?id=123&color=red |
| Fragment (anchor) | Targets a specific section on a page. | #section1 |
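To see how these components fit together in practice, here is a minimal sketch that splits a deep link into its parts using Python's standard `urllib.parse` module. The helper name `describe_url` and the example URL are hypothetical, chosen to match the examples in the table.

```python
from urllib.parse import parse_qs, urlsplit


def describe_url(url):
    """Split a URL into its main components (hypothetical helper)."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,           # e.g. "https"
        "host": parts.netloc,             # authority: hostname plus optional port
        "path": parts.path,               # the specific page within the site
        "query": parse_qs(parts.query),   # each value is a list of strings
        "fragment": parts.fragment,       # the anchor, without the leading '#'
    }


info = describe_url("https://www.example.com/products/details?id=123&color=red#section1")
print(info["path"])   # /products/details
print(info["query"])  # {'id': ['123'], 'color': ['red']}
```

`parse_qs` returns lists because a query string may legitimately repeat a key (for example `?color=red&color=blue`).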

To build an effective deep link, a site owner typically combines the hostname and the path to the specific content they wish to highlight. They may also add query parameters or an anchor to make the link more precise.
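Going the other way, a deep link can be assembled from its components. This is a sketch, assuming the standard-library functions `urlencode` and `urlunsplit`; the helper `build_deep_link`, its parameter names, and the `https` default are illustrative choices rather than any particular site's method.

```python
from urllib.parse import urlencode, urlunsplit


def build_deep_link(host, path, params=None, fragment="", scheme="https"):
    """Assemble a deep link from its URL components (hypothetical helper)."""
    # urlencode turns {"id": 123} into "id=123" and escapes unsafe characters.
    query = urlencode(params or {})
    # urlunsplit only adds "?" and "#" when the query or fragment is non-empty.
    return urlunsplit((scheme, host, path, query, fragment))


link = build_deep_link(
    "www.example.com", "/products/details", {"id": 123, "color": "red"}, "section1"
)
print(link)  # https://www.example.com/products/details?id=123&color=red#section1
```

Using `urlencode` instead of string concatenation avoids broken links when a parameter value contains spaces or reserved characters such as `&`.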

The use of deep links can dramatically improve the user experience. By directing the user straight to the content they desire, site owners reduce friction, improve navigation, and provide a more engaging experience. This is especially beneficial when sharing articles or specific pieces of information.

For business campaigns, deep linking can be a key tool for driving conversions because it delivers information that is directly relevant to prospective buyers. Campaigns that use deep links are likely to see higher conversion rates, since they take users straight to the intended destination: a buyer does not have to search for the relevant product or service and can access it immediately.

Deep links also streamline marketing campaigns by directing users to specific product pages, promotional offers, or contact forms, which makes a campaign's impact easier to track. By using a unique deep link for each campaign, marketers can monitor which links drive the most traffic and conversions.
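One common way to make each campaign's link unique is to append tracking parameters to the query string. The sketch below assumes the widely used UTM convention (`utm_source`, `utm_medium`, `utm_campaign`) recognized by analytics tools such as Google Analytics; the function names, the default source and medium values, and the example URLs are hypothetical. It tags a deep link with a campaign name, then counts clicks per campaign from a list of clicked URLs.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_link(deep_link, campaign, source="newsletter", medium="email"):
    """Append UTM-style campaign parameters to an existing deep link."""
    parts = urlsplit(deep_link)
    params = parse_qsl(parts.query)  # keep any query parameters already present
    params += [("utm_source", source), ("utm_medium", medium), ("utm_campaign", campaign)]
    return urlunsplit(parts._replace(query=urlencode(params)))


def clicks_per_campaign(clicked_urls):
    """Count clicks per campaign, read back from the utm_campaign parameter."""
    counts = Counter()
    for url in clicked_urls:
        campaign = dict(parse_qsl(urlsplit(url).query)).get("utm_campaign")
        if campaign:  # ignore untagged links
            counts[campaign] += 1
    return counts


spring = tag_link("https://www.example.com/products/details?id=123", "spring_sale")
summer = tag_link("https://www.example.com/offers", "summer_promo")
print(spring)
# https://www.example.com/products/details?id=123&utm_source=newsletter&utm_medium=email&utm_campaign=spring_sale
print(clicks_per_campaign([spring, spring, summer]))
# Counter({'spring_sale': 2, 'summer_promo': 1})
```

In a real deployment the clicked URLs would come from server logs or an analytics platform rather than a hard-coded list.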

In the digital landscape, where user attention is precious, understanding and utilizing deep linking can set an organization apart. By providing a seamless and targeted experience, organizations can improve customer engagement, promote conversions, and provide better outcomes for the business.

The Broader Implications of AI-Generated Misinformation

The proliferation of deepfakes is a broad attack on the foundations of trust and information in the digital age. It undermines public trust in media, institutions, and even individuals, and it can be used to manipulate narratives, spread disinformation, and incite social unrest.

The ability to create convincing but fabricated content poses profound challenges for journalism, media, and public discourse. As it becomes increasingly difficult to distinguish genuine content from manipulated content, users must exercise heightened vigilance and critical thinking. Fact-checking, source verification, and cross-referencing become crucial practices.

The scale of the problem is considerable. The speed and ease with which deepfakes can be created and distributed amplify their potential for harm. Social media platforms and other online channels can be weaponized to spread misinformation rapidly, reaching vast audiences before the truth can be established.

The impact is especially devastating on the individuals targeted. The creation and distribution of deepfakes can lead to significant emotional distress, reputational damage, and even legal consequences. The experience is often traumatizing, forcing victims to grapple with the invasion of privacy and the erosion of their personal and professional lives.

Moreover, it's not just about the individuals targeted; it's about the erosion of social trust. When people lose confidence in the authenticity of information, it becomes more difficult to have meaningful conversations, make informed decisions, and maintain a healthy democratic society. This loss of trust can have far-reaching consequences for social cohesion, political stability, and economic prosperity.

Addressing the threat of deepfakes requires a multifaceted approach that involves technological solutions, legal frameworks, and public education initiatives. This includes investing in the development of sophisticated detection tools, creating legal safeguards to protect victims, and educating the public about the risks of misinformation.

Combating the misuse of AI necessitates the collaborative efforts of tech companies, lawmakers, researchers, and the media. The responsibility lies with the tech companies to develop and implement tools for detecting and removing deepfakes from their platforms, while the lawmakers need to put in place appropriate laws and penalties for creating and spreading malicious content. Researchers can help with the development of more effective detection methods, and the media can play a crucial role in educating the public and providing accurate information.

It's also vital to promote media literacy and critical thinking skills. Educating the public about how to identify deepfakes and assess the credibility of sources is a crucial step in mitigating the impact of AI-generated misinformation.

This is not just a technical problem; it's a societal challenge. Taking a stand against it is essential if our digital interactions are to remain safe.

Author: Mr. Emerald Wyman